Overview

This case study presents a project aimed at improving the user experience of video content creation by leveraging data, machine learning, and AI. It introduces a method for matching audio to video: Natural Language Processing (NLP) analyzes text describing the video and finds sounds that fit its mood. This approach addresses the complex challenge of recognizing the context of video narratives, ultimately contributing to more immersive and emotionally resonant multimedia presentations.

Research Problem

Content creators struggle to sync audio and video so that the result evokes the desired mood and tone. Traditional methods are time-consuming and require expertise to engage viewers effectively.

Existing methods often lack the emotional nuance required for seamless audio-video harmonization.

This project seeks to address the following research question:

"How can text analysis be used to enhance matching sounds with the mood observed in video content?"

Research Objectives

To create a user-friendly tool that automatically analyzes textual input to determine the mood of a video and suggests audio tracks that fit that mood, thereby streamlining the audio-video editing process.

Stakeholders: Content creators, marketers, video editors, sound editors, MDDD students, lecturers, supervisors, designers, engineers, clients, and customers.

Methodologies Used: Natural Language Processing (NLP), TF-IDF, Word2Vec, co-reflection, heuristic evaluations, peer testing, expert reviews, and iterative design.

Exploration & Findings

The exploration phase involved extensive research and experimentation with various models and methodologies:

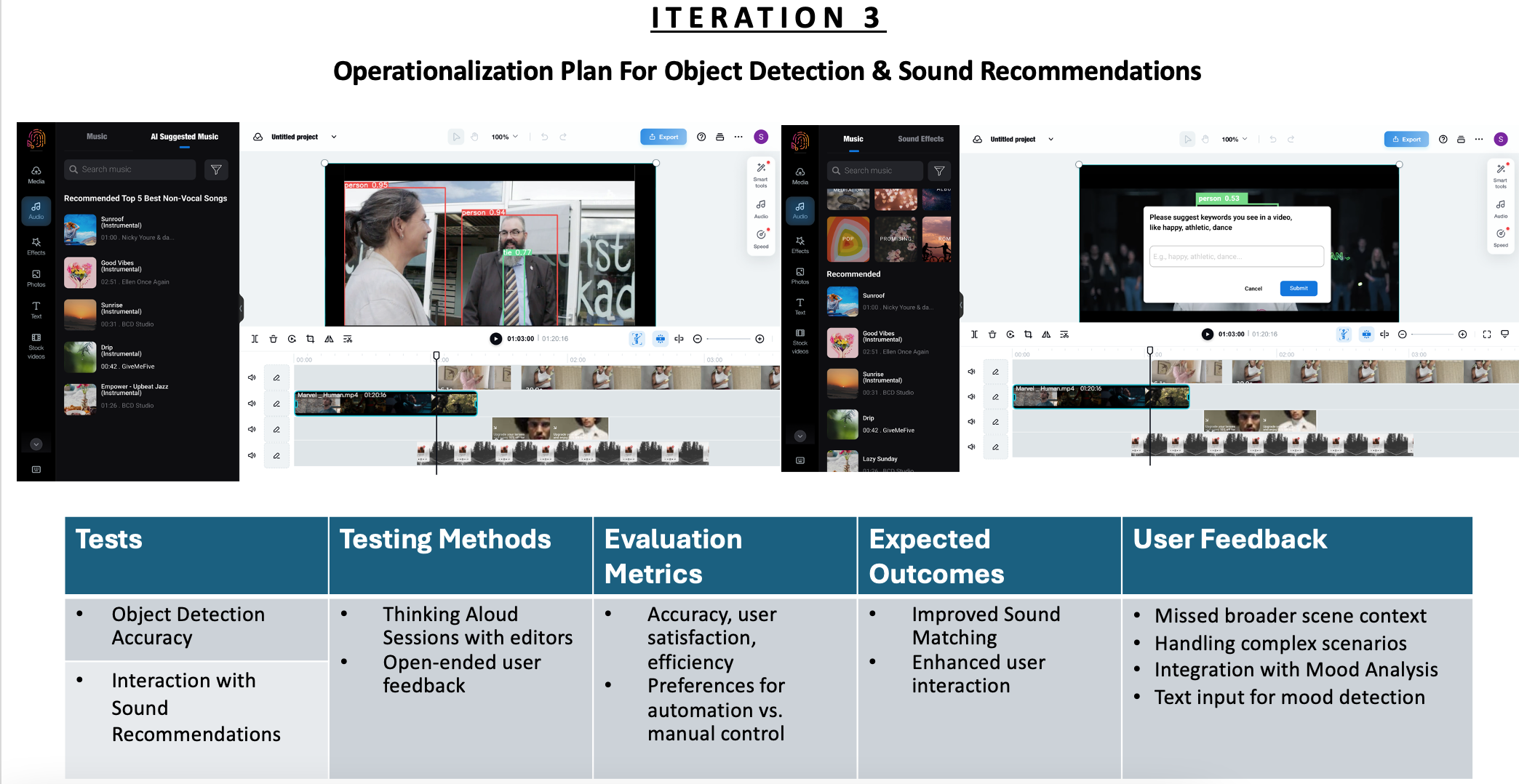

- Initial Attempts: Started with AI-driven object detection (YOLO) for sound alignment in video creation, which proved insufficient due to the lack of emotional connection.

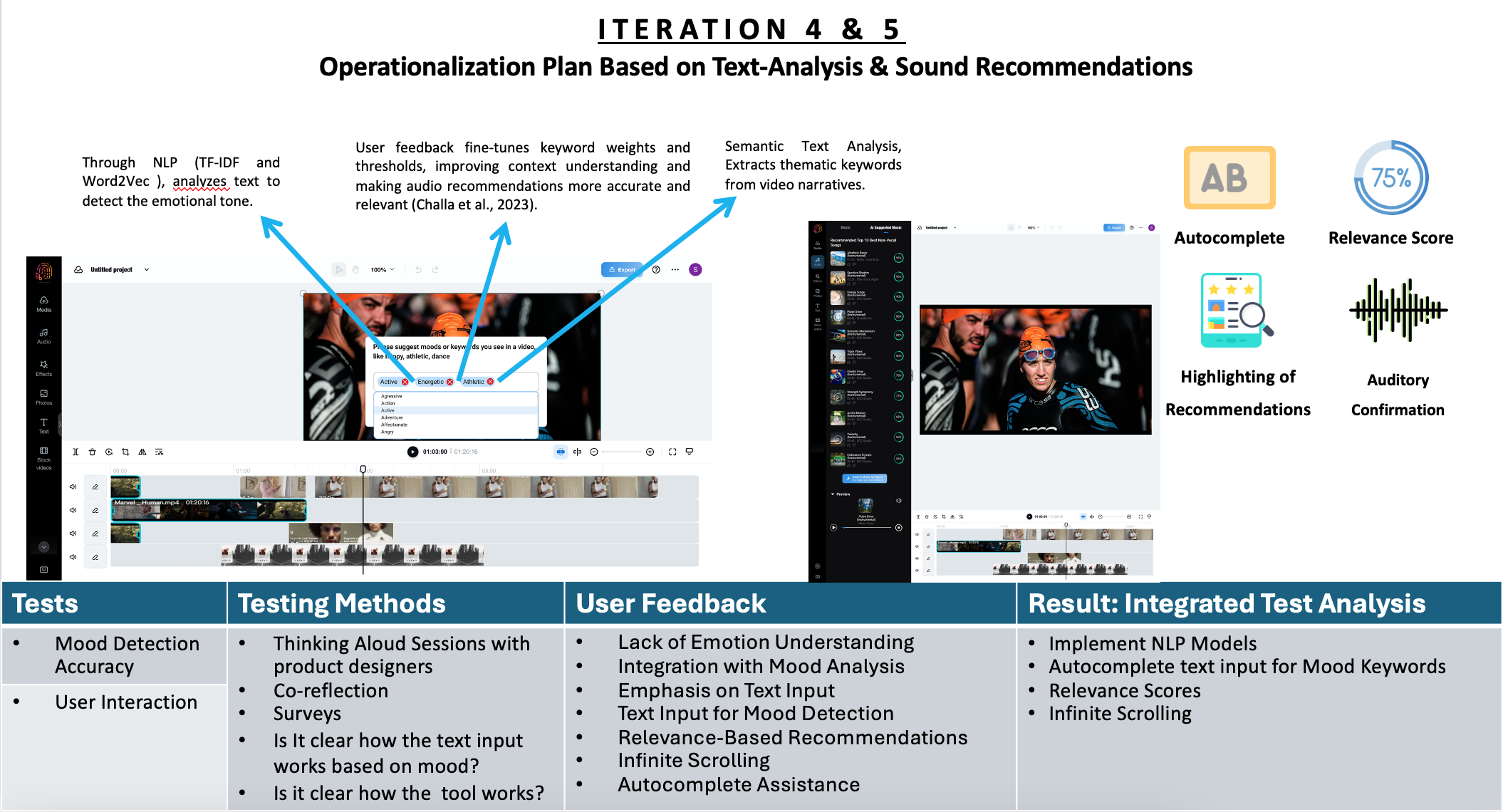

- Shift to NLP Models: Transitioned to NLP models, focusing on TF-IDF and Word2Vec for mood detection based on text analysis. This approach significantly improved the alignment of soundtracks with video moods.

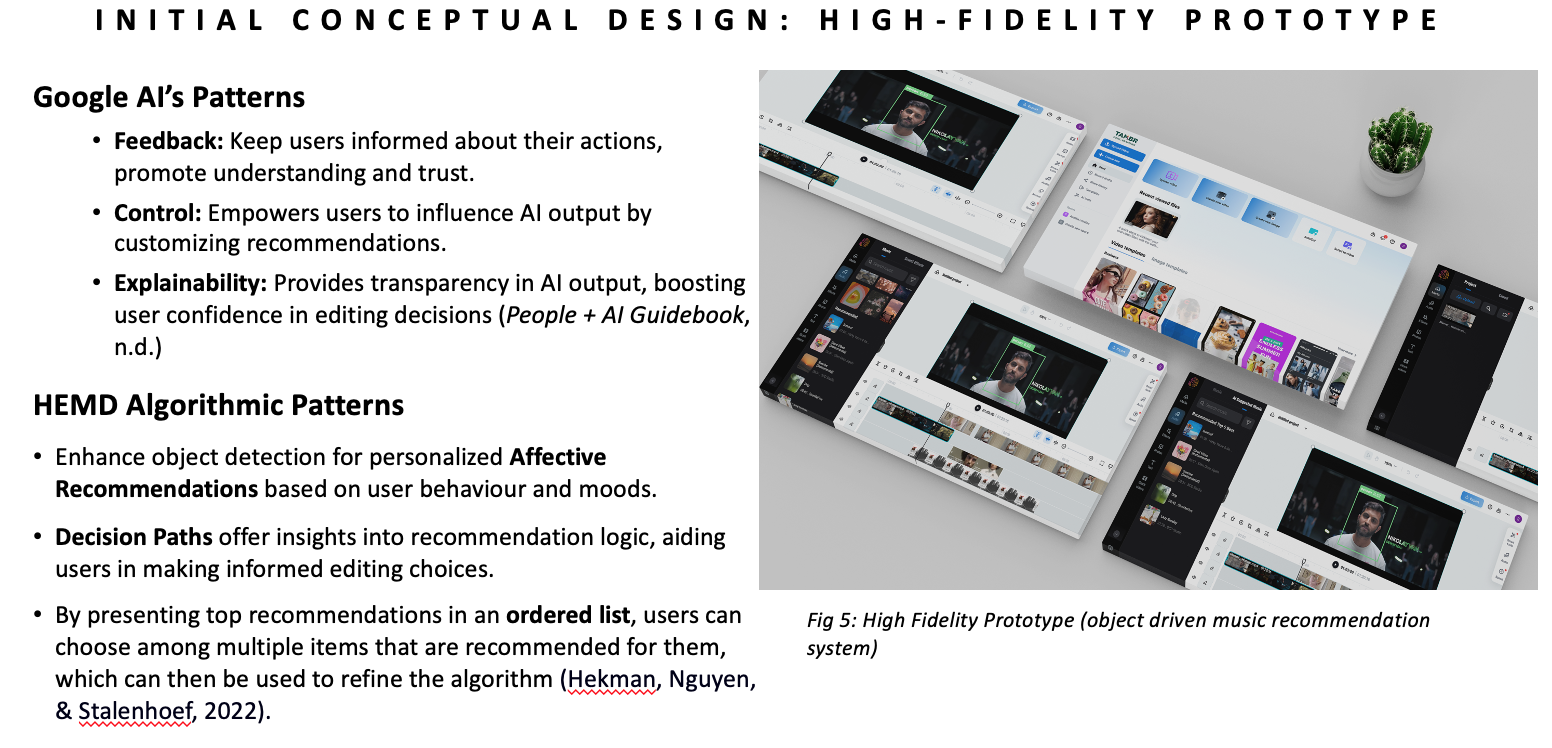

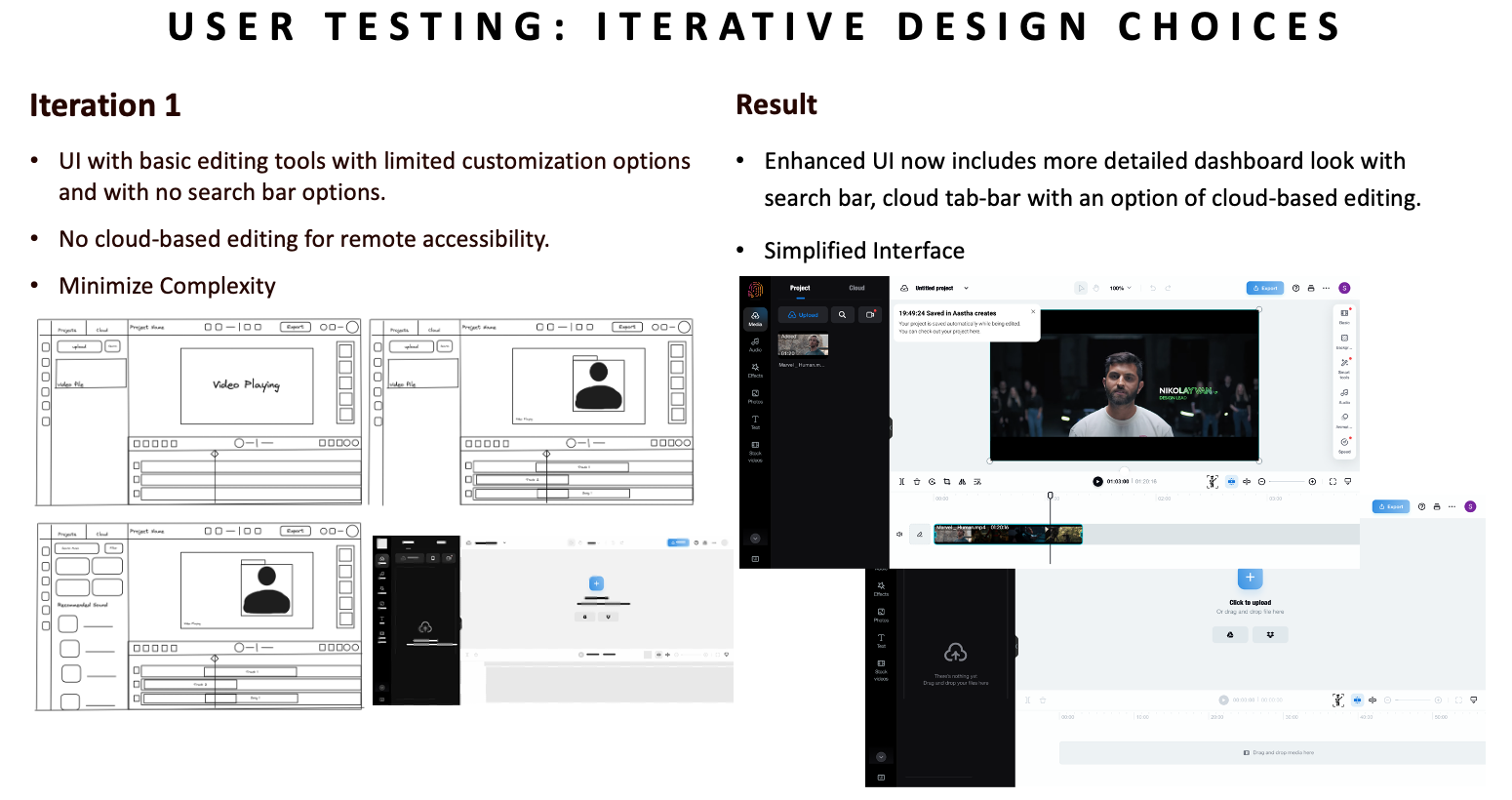

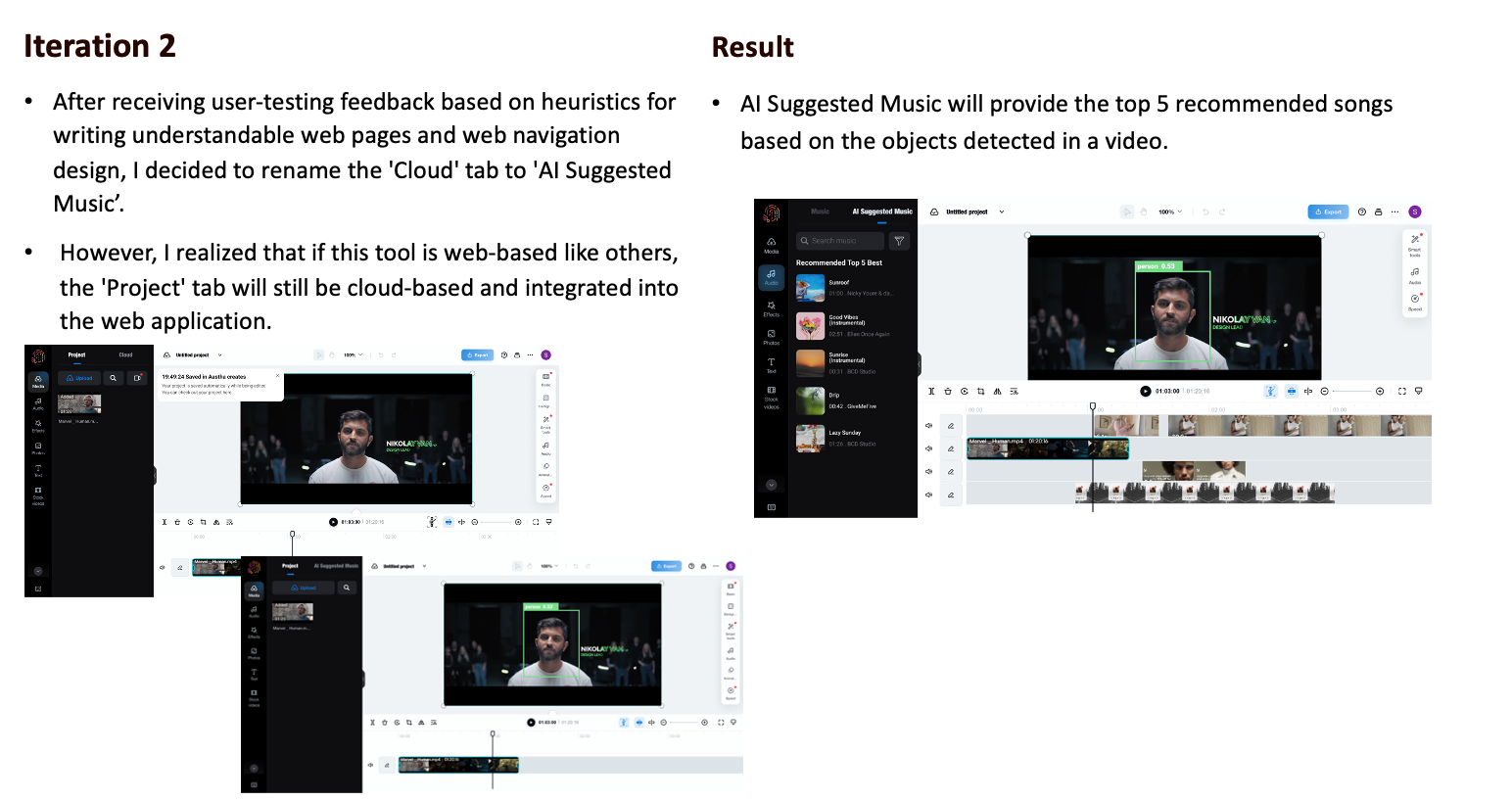

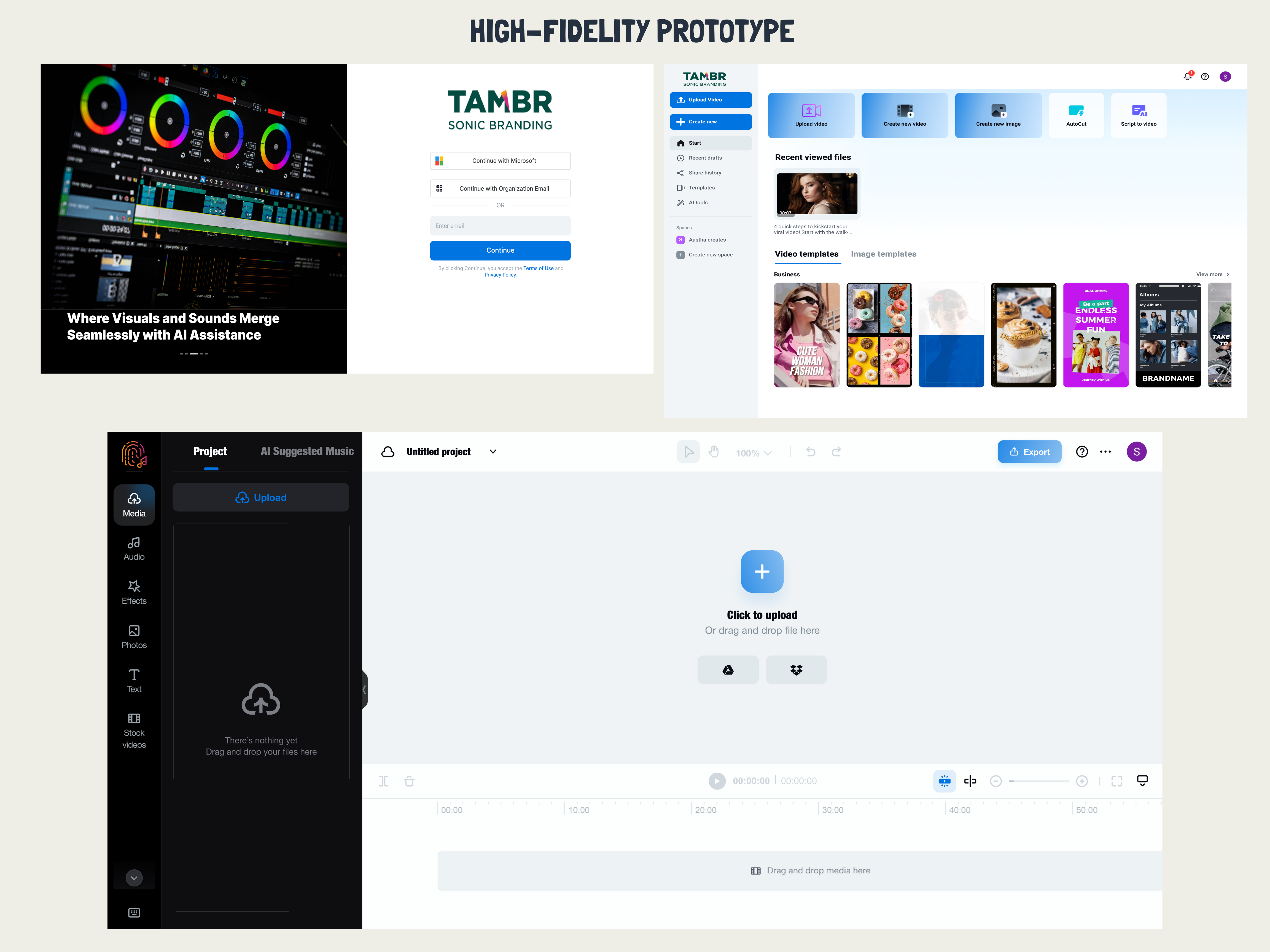

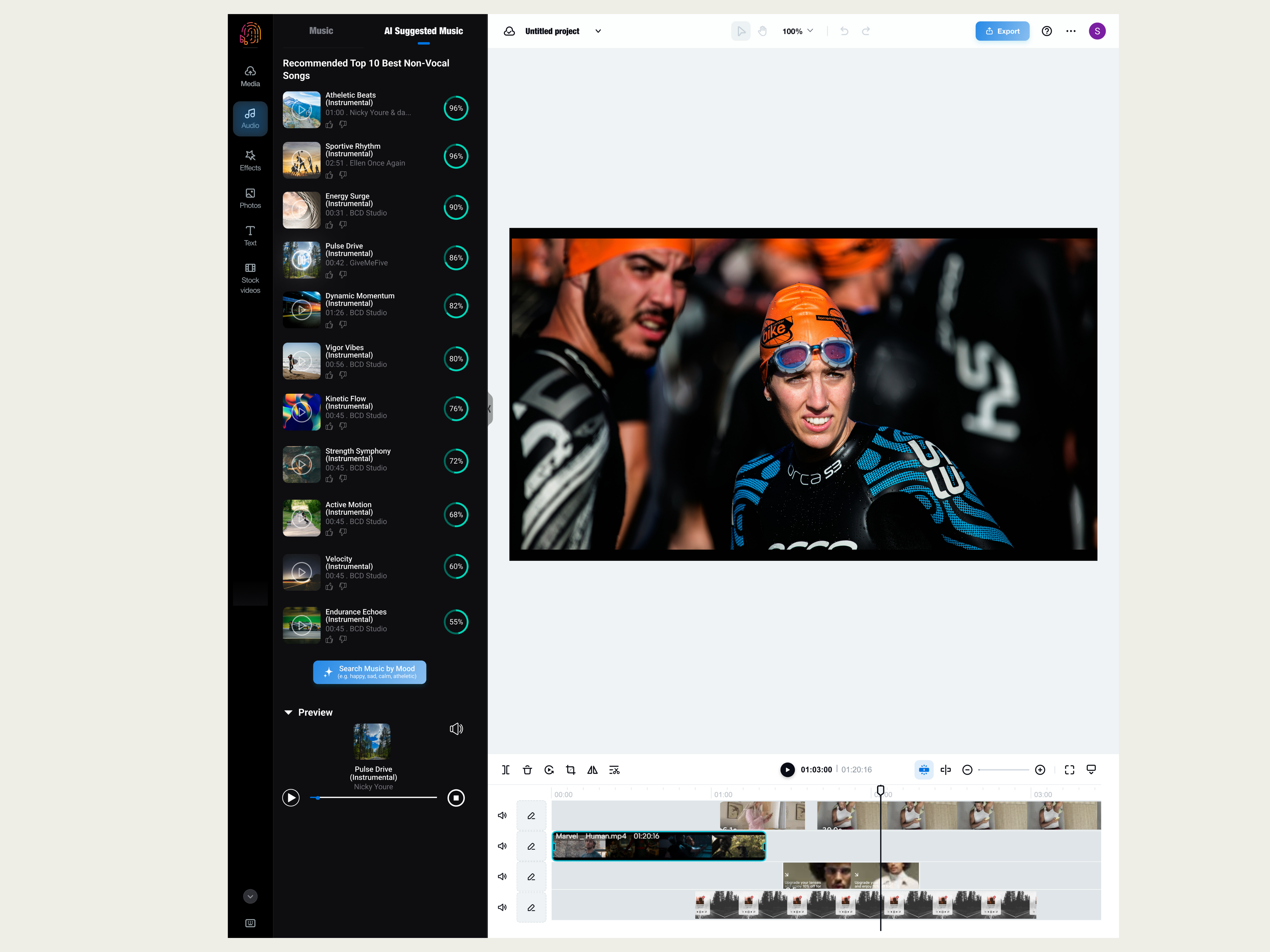

- Iterative Prototyping: Developed high-fidelity prototypes featuring user-friendly text input fields, autocomplete suggestions, relevance percentage displays, and infinite scrolling.

- High-Quality Visuals:

  - Wireframes and low-fidelity prototypes, iteratively tested and refined based on feedback.

  - Final high-fidelity prototypes showcasing the tool’s interface and functionality.
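The autocomplete suggestions and relevance percentage display mentioned for the prototypes can be illustrated with a small sketch. It is not the project's implementation: the `autocomplete` and `relevance_percent` helpers, the `MOOD_TERMS` vocabulary, and the assumption that similarity scores fall between 0 and 1 are all hypothetical.

```python
from bisect import bisect_left


def autocomplete(prefix, vocabulary, limit=5):
    """Return up to `limit` entries of a sorted, lowercase vocabulary that start with `prefix`."""
    prefix = prefix.strip().lower()
    if not prefix:
        return []
    # Binary search for the first entry that could match the prefix.
    start = bisect_left(vocabulary, prefix)
    matches = []
    for word in vocabulary[start:]:
        if not word.startswith(prefix) or len(matches) == limit:
            break
        matches.append(word)
    return matches


def relevance_percent(score):
    """Map a similarity score (assumed to lie in [0, 1]) to a whole-number percentage."""
    clamped = min(max(score, 0.0), 1.0)
    return round(clamped * 100)


# Hypothetical mood vocabulary; the real tool's term list is not given in the case study.
MOOD_TERMS = sorted(["calm", "cheerful", "chill", "dark", "dramatic", "dreamy", "tense"])

suggestions = autocomplete("ch", MOOD_TERMS)
```

Here `suggestions` holds the terms beginning with "ch", and `relevance_percent` turns a raw score into the kind of figure a results list could show next to each track.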

Methodology

1. Collection and Pre-processing:

- Loaded and cleaned the dataset from a CSV file using pandas.

- Combined relevant columns for keyword analysis.

- Generated TF-IDF vectors and Word2Vec embeddings for the combined keywords.
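The loading, cleaning, and column-combining steps above can be sketched as follows. This is a minimal, dependency-free illustration that uses Python's standard `csv` module in place of pandas. The column names (`title`, `mood`, `genre`, `tags`), the sample rows, and the `load_tracks` helper are assumptions, since the case study does not describe the dataset's schema.

```python
import csv
import io

# Hypothetical sample data standing in for the project's CSV file.
SAMPLE_CSV = """title,mood,genre,tags
Sunrise Drive,Uplifting,Pop,"bright, hopeful"
Night Alley,,Ambient,"dark, tense"
,Calm,Acoustic,"soft, warm"
"""


def load_tracks(csv_text, text_columns=("mood", "genre", "tags")):
    """Parse CSV text, drop rows without a title, and merge text columns into one keyword string."""
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        title = (row.get("title") or "").strip()
        if not title:
            continue  # cleaning step: skip rows that cannot be identified
        parts = [(row.get(col) or "").strip().lower() for col in text_columns]
        # Join the non-empty columns and replace commas with spaces.
        combined = " ".join(p.replace(",", " ") for p in parts if p)
        # Collapse repeated whitespace left over from the replacement.
        combined = " ".join(combined.split())
        rows.append({"title": title, "keywords": combined})
    return rows


tracks = load_tracks(SAMPLE_CSV)
```

Each resulting entry pairs a track title with a single lowercase keyword string, which is the form a vectorizer would consume in the next step.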

2. Model Development:

- TF-IDF: Simplifies mood detection by processing user-input keywords and comparing them with music metadata to calculate similarity scores.

- Word2Vec: Converts words into dense numerical vectors, capturing semantic relationships and contextual meanings.
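To make the TF-IDF comparison concrete, the sketch below scores a user's keywords against track metadata with cosine similarity. It is an illustration written with the standard library only, not the project's code: the smoothed IDF formula, the `rank_tracks` helper, and the sample tracks are assumptions.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Return one sparse TF-IDF dict per tokenized document, plus the IDF table."""
    n = len(docs)
    doc_freq = Counter(term for doc in docs for term in set(doc))
    # Smoothed IDF (an assumed variant): terms found in every document keep a nonzero weight.
    idf = {t: math.log((1 + n) / (1 + df)) + 1 for t, df in doc_freq.items()}
    vectors = [{t: c * idf[t] for t, c in Counter(doc).items()} for doc in docs]
    return vectors, idf


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_tracks(query, tracks):
    """Score every track's keywords against the query and sort by descending similarity."""
    docs = [t["keywords"].split() for t in tracks]
    vectors, idf = tfidf_vectors(docs)
    # Query words that never occur in the metadata carry no weight and are dropped.
    q_counts = Counter(w for w in query.lower().split() if w in idf)
    q_vec = {t: c * idf[t] for t, c in q_counts.items()}
    scored = [(cosine(q_vec, v), t["title"]) for v, t in zip(vectors, tracks)]
    return sorted(scored, reverse=True)


# Hypothetical metadata; the real dataset is not shown in the case study.
tracks = [
    {"title": "Sunrise Drive", "keywords": "uplifting pop bright hopeful"},
    {"title": "Night Alley", "keywords": "ambient dark tense"},
    {"title": "Quiet Harbor", "keywords": "calm acoustic soft warm"},
]

ranking = rank_tracks("dark tense chase", tracks)
```

In this toy data only "Night Alley" shares words with the query, so it ranks first and the other tracks score zero. That exact-match limitation is what dense embeddings such as Word2Vec are meant to ease, since related words like "dark" and "gloomy" can end up with nearby vectors even when the strings differ.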

3. User Testing and Feedback:

- Conducted surveys, interviews, and think-aloud sessions to gather user feedback.

- Utilized heuristic evaluations and peer reviews to refine prototypes.

4. Ethical Considerations:

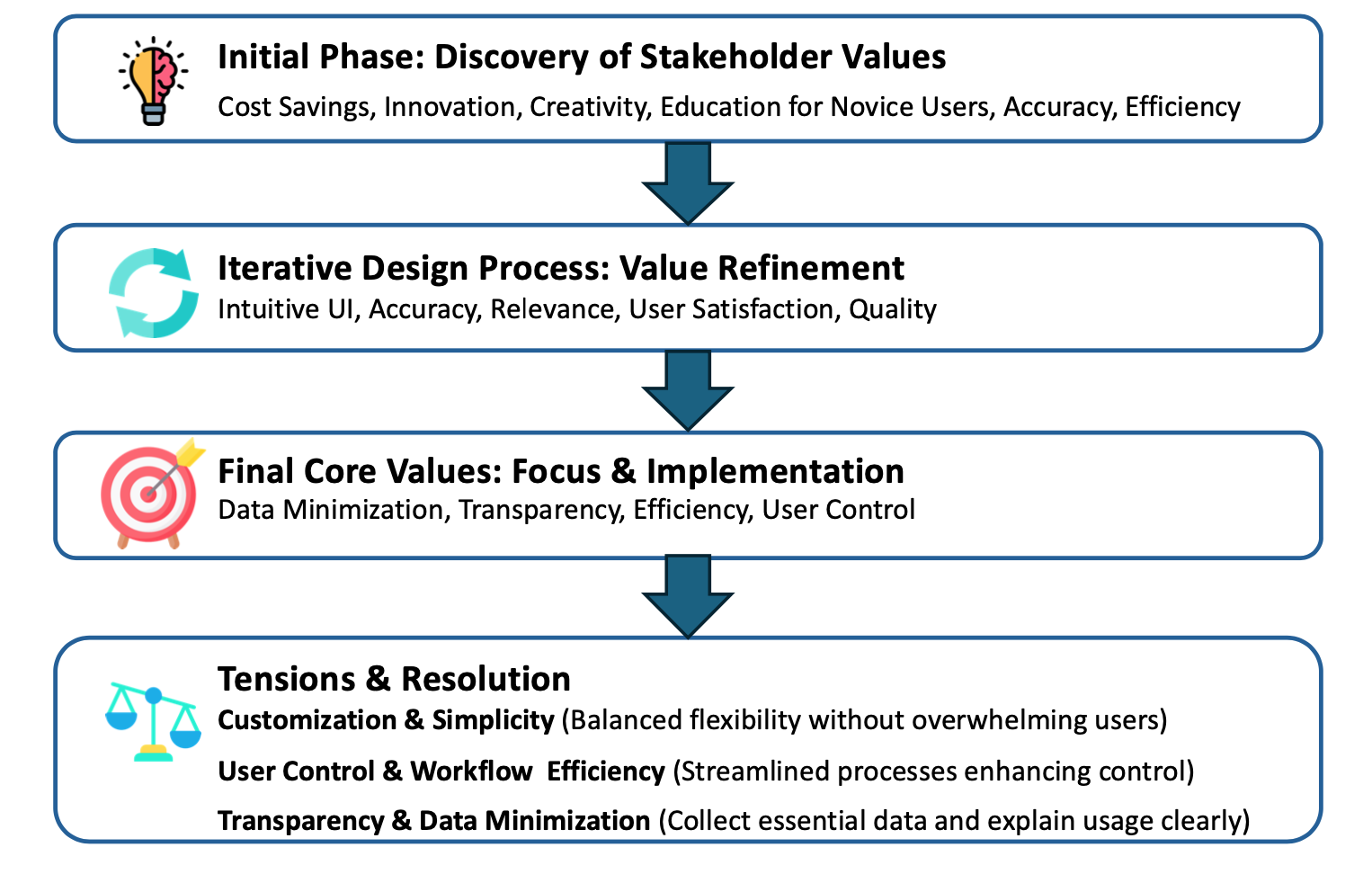

- Ensured transparency, user control, efficiency, and data minimization.

- Adhered to ethical principles throughout the design process.

Technical Report Highlights:

- Detailed explanation of the models used, data pre-processing steps, and performance metrics.

- Integration of user feedback into model refinement and UI enhancements.

Impact & Results

The project led to clear user experience improvements, notably raising user satisfaction through efficient and accurate audio recommendations.

The interface was simplified, incorporating intuitive features such as autocomplete suggestions and relevance scores, making the tool more accessible and user-friendly.

Collaboration with stakeholders was a key component of the process, ensuring that the tool not only met their needs but also adhered to ethical standards.

Regular feedback loops were established, allowing for continuous refinement of the tool's functionality and usability.

Moreover, the project highlighted the innovative potential of text-based mood detection in the realm of video content creation.

It created substantial value for users by providing a robust solution that addresses the emotional aspects of audio-video synchronization, ultimately enhancing the overall creative process.


Reflection & Future Directions

The project encountered and overcame several challenges, including the initial limitations of object detection, which were successfully addressed by shifting to more sophisticated NLP models.

The subjectivity and complexity of mood detection in speech presented another significant hurdle, which was mitigated through iterative testing and continuous user feedback.

Iterative learning: each cycle of testing, feedback, and revision improved the product and deepened my understanding of user needs and design principles. This adaptability allowed me to refine the tool in unexpected ways.

Adapting to insights: shifting from object detection to NLP for mood detection improved emotional context and compliance, highlighting the need for adaptability.

Looking ahead, there are plans to enhance the tool by integrating additional data types, such as audio features and visual cues, and by analyzing how emotions change across different video genres to produce better music matches. Both could further improve the accuracy and relevance of recommendations.

Exploring advanced embedding techniques like BERT is also on the agenda, aiming to achieve a deeper contextual understanding within the tool.

On a broader scale, the project prompted an analysis of the societal impacts of datafication and automation, particularly concerning jobs in video production.

This reflection underscored the importance of supporting human expertise with AI-driven tools, ensuring that technology serves to augment rather than replace the creative process.

Conclusion

The "Optimizing Mood-Based Audio Recommendations for Video Content Using Text Analysis" project showcases the successful application of NLP and machine learning to enhance the creative process in video content creation. By prioritizing user experience, ethical considerations, and stakeholder collaboration, this project highlights the transformative potential of data-driven design in the evolving multimedia landscape.